Basics of Big Data - Building a Hadoop data warehouse

This is the 3rd part of a series of posts on Big Data. Read Part-1 (What is Big Data) and Part-2 (Hadoop).

Traditionally data warehouses have been built with relational databases as backbone. With the new challenges (3Vs) of Big Data, relational databases have been falling short of the requirements of handling

- New data types (unstructured data)

- Extended analytic processing

- Throughput (TB/hour loading) with immediate query access

The industry has turned to Hadoop as a disruptive solution for these very challenges.

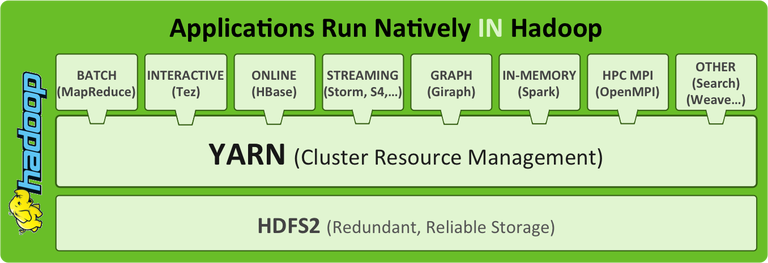

The new Hadoop architecture (courtesy Hortonworks):

Comparing the RDBMS and Hadoop data warehousing stack:

The heavy-lifting for any data warehouse is the ETL (Extract-Transform-Load) or ELT processing. The processing layers involved in the data warehousing ETL are different across conventional RDBMS and Hadoop.

The Hadoop USP: Exploratory analytics

All 3 layers in a conventional RDBMS are glued together into a proprietary bundle unlike Hadoop where each layer is independent and separate, allowing multiple access. Apart from these 3 layers, Hadoop provides an important advantage for exploratory BI in a single step from data load to query, which is not available in conventional RDBMS.

The data-load-to-query in one step involves:

-

Copy data into HDFS with ETL tool (e.g. Informatica), Sqoop or _Flume _into standard _HDFS _files (write once). This registers the metadata with HCatalog.

-

Declare the query schema in Hive or Impala, which doesn’t require data copying or re-loading, due to the schema-on-read advantage of Hadoop compared with schema-on-write constraint in RDBMS.

-

Explore with SQL queries and launching BI tools e.g. Tableau, BusinessObjects for exploratory analytics.

Other data warehousing use cases with Hadoop:

1. High performance DW using Parquet columnar file:

The Parquet file format has a columnar storage layout with flexible compression options and its layout is optimized for queries that process large volumes of data. It is accessible to multiple query and analysis applications and can be updated and have the schema modified. A high performance DW can be created by copying raw data from raw HDFS into Parquet files which can then be queried in real-time using Hive or Cloudera Impala.

2. Platform for transforming data or ETL:

The (extract-transform-load) ETL or ELT (extract-load-transform) use case for Hadoop is well established. The reasons why Hadoop is a popular ETL platform are:

- Hadoop itself is a general-purpose massively parallel processing (MPP) platform.

- Hadoop’s NoSQL database - it’s flexible schema-on-read offers a relaxed alternative to the rigid strongly-typed schema-on-write model of a relational data warehouse.

- Hadoop is highly cost-effective (up to 50 to 100 times cheaper) compared to conventional relational data warehouse on a per-Terabyte basis, due to its commodity hardware distributed architecture (low-cost scale-out) compared to the relatively high-end infrastructure required for conventional systems (high-cost scale-up).

- Higher performance on a per-core basis (CPU processing power) allows Hadoop to beat most conventional ETL systems.

- Most ETL vendors already market versions of their software which leverage Hadoop, whether this is through using Hadoop connectors (e.g. Oracle Data Integrator) or ETL-optimized libraries for MapReduce (e.g. Syncsort DMX-h).

Most such ETL tools allows existing developers to build Hadoop ETL without having to code or script in MapReduce with Java or Pig. Several vendors also provide ETL-on-Hadoop automation, and a rich user experience (UX) with drag-and-drop design of jobs or workflows, and even native integration taking advantage of (Yet Another Resource Negotiator) YARN in Hadoop v2.0

3. Advanced analytics:

Predictive analytics, statistics and other categories of advanced analytics produce insights that traditional BI approaches like query and reporting may find unlikely to discover. Advanced analytics platforms and tools become more important when managing big data volumes due to issues of scalability and performance.

Strategic applications, like data mining, machine learning and analytics on new types of data, including unstructured data, with much improved performance due to Hadoop’s distributed architecture also allow for new types of integrated analysis previously not possible on relational database platforms. Several newer tools and vendors support using statistical packages and languages like R (and SAS or SPSS) for big data analytics.

4. DW offloading:

Due to low storage costs (50 to 100 times less on a per-TB basis), Hadoop is well suited to keeping data online for an indefinite period of time. This is also known as a data lake or an enterprise data hub, where various types of data can be kept till newer use cases are discovered for such data. Such storage also allows for “active archival” of infrequently used data from (Enterprise Data Warehouse) EDW, thus allowing only the most valuable (and usually most recent) data to be kept in the EDW.

With ELT loads driving up to 80% of database capacity, Hadoop can also be used as a staging area for data preparation and ELT to allow offloading data processing from the EDW

With the big data deluge, and its 3 equally challenging dimensions of volume, velocity and variety - existing conventional platforms are finding it difficult to meet all of an organization’s data warehousing needs along with ETL processing times and availability SLAs. The balance of power keeps tilting towards Hadoop with newer tools and appliances extending the capabilities of Hadoop and with its superior price/performance ratio, building a data warehouse leveraging Hadoop needs to be given serious consideration.

Read the series on Big Data: Part-1 : Basics, Part-2 : Hadoop, Part-3 : Hadoop data warehouse and Part-4 : NoSQL

comments powered by Disqus